Detecting user intention in human-robot interaction from non-verbal cues

This project was part of the elective 'ML for IOT' in my master's.

Supervisor

Jered Vroon

Collab

Group of 3

Details

Master Project

4-month duration

At TU Delft

During the pandemic, robots offered a way to reduce health risks, since they cannot become infected themselves. This led to the idea of a disinfectant robot that approaches people and encourages them to disinfect their hands more often.

The challenge is to identify how humans communicate their intentions and feelings, and how those signals need to be translated so that the robot knows what to do in ways that are both effective and socially acceptable. This is made even more challenging by the fact that different people respond in different ways. [1,2]

[1] IEEE Computer Society, & Institute of Electrical and Electronics Engineers. (n.d.). 2019 IEEE First International Conference on Cognitive Machine Intelligence: CogMI 2019: proceedings: Los Angeles, California, USA, 12-14 December 2019.

[2] Vroon, J., Joosse, M., Lohse, M., Kolkmeier, J., Kim, J., Truong, K., Englebienne, G., Heylen, D., & Evers, V. (n.d.). Dynamics of Social Positioning Patterns in Group-Robot Interactions*. www.giraff.org


By probing interactions with a simplified robot mock-up we gained a preliminary understanding of the physical cues through which humans indicate their usage intention.


ignore the moving item by facing away

reach out to signal intention of use

turn away to signal no interest


Aiming to create a shared language between the robot and the human, we translated human gestures into data for the robot. Based on the first tests, we created a preliminary 'dictionary'.

To evaluate how well the language we identified (the features and labels we observed) works in reality to communicate user intention to the robot, we needed to collect real data.

We prepared a data collection setup with a Wizard-of-Oz prototype. The prototype appears to act autonomously while actually being controlled by a person. With a 3D camera and a Jetson, we captured the interactions and physical cues that the prototype evoked.

A total of 40 participants were recorded.


Jetson

3D Camera (Zed 2)

Once the data were collected, the actual cues needed to be extracted from the video material, described mathematically, and saved into a dataset. My team colleague, who had experience in Python programming, was responsible for the high-level feature extraction; I assisted by writing small helper functions to describe individual gestures such as head rotation. Because of the small amount of data (40 initial observations), no ML could be used to automate the feature extraction.
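To illustrate what such a helper can look like, here is a minimal sketch that estimates head rotation (yaw) from two 3D keypoints. The function name `head_yaw_degrees`, the use of ear keypoints, and the camera axis convention (x to the right of the image, z away from the camera) are assumptions made for this example and not the project's actual code.

```python
import math


def head_yaw_degrees(left_ear, right_ear):
    """Estimate head yaw in degrees from two (x, y, z) keypoints.

    Assumed convention: x points to the right of the image and z points
    away from the camera. 0 degrees means the head squarely faces the
    camera; the sign tells which ear is further away from the camera.
    """
    # A person facing the camera has their left ear at the larger x value.
    dx = left_ear[0] - right_ear[0]
    # A depth difference between the ears means the head is rotated.
    dz = left_ear[2] - right_ear[2]
    return math.degrees(math.atan2(dz, dx))


# Hypothetical keypoints in metres: both ears at the same depth.
facing_camera = head_yaw_degrees((0.1, 0.0, 2.0), (-0.1, 0.0, 2.0))
```

A gesture such as "facing away" could then be described as the yaw exceeding some threshold for a number of frames; the threshold itself would have to be tuned on real recordings.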

Low Level Extraction

With the help of an ML script that ran in the background while we collected video data, we received information about 13 different points in space that were mapped onto the human bodies we recorded.

Data Cleaning

Some of the data required cleaning. Non-participating people in the recordings were removed, and the videos were shortened to the important interactions. Where the mapping of the 13 points did not work, e.g. due to long clothes, the data were removed.
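One part of this cleaning step, dropping frames in which the body mapping failed, could be sketched as follows. This assumes the keypoints are stored as an array of shape (frames, 13, 3) and that a failed point is encoded as NaN; both the storage format and the function name `drop_incomplete_frames` are assumptions for illustration.

```python
import numpy as np


def drop_incomplete_frames(frames):
    """Keep only frames in which every keypoint coordinate is present.

    frames: array-like of shape (n_frames, n_points, 3), where a missing
    coordinate is assumed to be stored as NaN.
    """
    frames = np.asarray(frames, dtype=float)
    # A frame is complete if none of its coordinates is NaN.
    complete = ~np.isnan(frames).any(axis=(1, 2))
    return frames[complete]


# Hypothetical recording: 3 frames, 13 points, one failed point in frame 1.
recording = np.zeros((3, 13, 3))
recording[1, 4, 0] = np.nan
cleaned = drop_incomplete_frames(recording)
```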

High Level Extraction

Finally the data were used to create a dataset containing the features we observed in previous interactions per person.
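As a rough sketch of what a high-level feature can look like, the example below computes the mean speed of one tracked point over a clip and then scales the values across participants. The dataset contains a feature called `nrm_mean_velocity`, but how it was actually computed and normalised is not documented here, so the min-max scaling and both function names are assumptions.

```python
import numpy as np


def mean_velocity(track, fps):
    """Mean speed of one keypoint, in position units per second.

    track: array-like of shape (n_frames, 3) with the point's position
    in each frame; fps: frames per second of the recording.
    """
    track = np.asarray(track, dtype=float)
    if len(track) < 2:
        return 0.0
    # Distance travelled between consecutive frames.
    steps = np.linalg.norm(np.diff(track, axis=0), axis=1)
    return float(steps.mean() * fps)


def min_max_normalise(values):
    """Scale values to the range [0, 1] (assumed normalisation scheme)."""
    values = np.asarray(values, dtype=float)
    span = values.max() - values.min()
    if span == 0:
        return np.zeros_like(values)
    return (values - values.min()) / span


# Hypothetical track: the point moves 1 unit per frame at 2 frames per second.
speed = mean_velocity([[0, 0, 0], [1, 0, 0], [2, 0, 0]], fps=2)
```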

The final dataset was far from perfect, primarily because of the low number of data points, especially compared to the high number of features. The final dataset contained 25 participants, 5 features, and 4 labels.


In order to evaluate how well the language we chose (the human gestures captured as features and the intentions captured as labels) is understood by the robot, I ran an exploratory data analysis as well as a few predictions via machine learning.

The results were not expected to be perfect, primarily due to the small dataset we had available. The most important findings are presented below; for the full code, see the PDF.

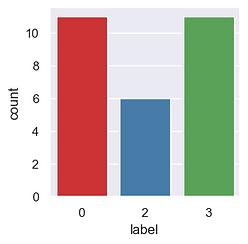

1. Check Label Distribution

In a first step, the data are plotted to investigate their distribution per label. This shows an imbalance. To solve that problem and to simplify the dataset, a new label is created by simply merging the old ones in all possible combinations.
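The counting and merging described in this step could be sketched like this. The four label names and the particular two-group merge are hypothetical; they show one possible combination and not the label scheme the project actually used.

```python
from collections import Counter


def merge_labels(labels, mapping):
    """Replace each original label by the merged label it maps to."""
    return [mapping[label] for label in labels]


# Hypothetical original labels (the real label names are not given here).
labels = ["use", "interested", "ignore", "avoid", "ignore", "ignore", "use"]

# Counting per label reveals how balanced the classes are.
original_counts = Counter(labels)

# One possible merge: collapse the four labels into two groups.
mapping = {
    "use": "potential_use",
    "interested": "potential_use",
    "ignore": "no_use",
    "avoid": "no_use",
}
merged = merge_labels(labels, mapping)
merged_counts = Counter(merged)
```

With the toy labels above, the merged groups end up closer in size than the four original labels, which is the effect the merging step aims for.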

2. Check Feature Distribution

Next, I try to gain a better understanding of how my features are distributed. This already indicates how well the gestures we observed predict a certain intention of use. The features, however, show a high amount of overlap per label, indicating that they are poor predictors of the labels and thus of the intention of use. Nonetheless, the features are not correlated, so none of them is redundant.
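A check for redundant features can be sketched with a pairwise Pearson correlation matrix. The helper names and the threshold of 0.8 are assumptions for this example; the toy data are made up and deliberately contain one redundant column.

```python
import numpy as np


def feature_correlations(X):
    """Pearson correlation matrix between the columns (features) of X."""
    X = np.asarray(X, dtype=float)
    return np.corrcoef(X, rowvar=False)


def correlated_pairs(X, threshold=0.8):
    """Index pairs of features whose absolute correlation exceeds threshold."""
    corr = feature_correlations(X)
    n = corr.shape[0]
    return [
        (i, j)
        for i in range(n)
        for j in range(i + 1, n)
        if abs(corr[i, j]) > threshold
    ]


# Toy data: column 1 is exactly twice column 0, so the two are redundant.
X = [[1, 2, 1], [2, 4, 0], [3, 6, 1], [4, 8, 0]]
pairs = correlated_pairs(X)
```

An empty result for the real dataset would correspond to the observation that none of the features is redundant.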

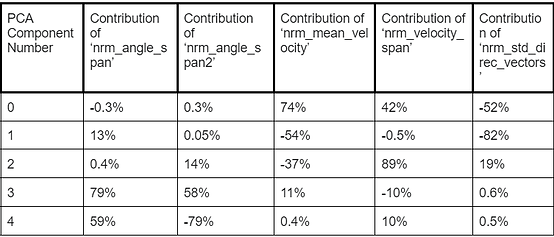

3. Principal Component Analysis

In order to further investigate and question our choice of features, I ran a PCA. We don’t really have a dimensionality issue, but we nonetheless have quite a lot of predictors compared to the amount of data. We thus use the PCA to find new feature variables that give lower-dimensional data while preserving as much of the data's variation as possible.

Conclusion: Let’s first focus on PCA component 0, the first PCA component, since it already covers 50% of the data’s variance. We can read that the feature that showed the most interesting attributes and the least correlation with the other features is ‘nrm_mean_velocity’. To better understand the new PCA features, I make another KDE plot.

Conclusion: To my surprise, I don’t see a strong relationship between the variance covered by our features (which is high in our first and second PCA components) and their use for predicting our labels. This can be seen in the decrease in overlap from PCA component 1 to PCA component 5.
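For readers unfamiliar with PCA, the idea can be sketched in a few lines of NumPy using a singular value decomposition. This is a generic illustration and not the analysis code from the project; the function name `pca` and the choice to standardise the features first are assumptions, and the sketch expects no constant columns.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its first principal components.

    Returns (scores, components, explained_variance_ratio).
    Features are standardised first (zero mean, unit variance).
    """
    X = np.asarray(X, dtype=float)
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    # Rows of Vt are the principal directions, ordered by variance.
    _, S, Vt = np.linalg.svd(Xs, full_matrices=False)
    variance = S ** 2
    ratio = variance / variance.sum()
    scores = Xs @ Vt[:n_components].T
    return scores, Vt[:n_components], ratio[:n_components]


# Toy data: both columns carry the same information, so one component
# is enough to describe all of the variance.
scores, components, ratio = pca([[0, 0], [1, 1], [2, 2], [3, 3]], n_components=2)
```

The explained variance ratio returned here is the quantity behind statements such as "the first component covers 50% of the variance".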

4. Prediction Tests with Classification

I aim to use classification to investigate the importance and relevance of our features for our labels. My focus is on correctly predicting the labels that indicate potential usage of our hand dispenser. I would of course like to have good results for all the labels, but since we try to encourage people to use the hand dispenser, I would rather falsely approach a person who does not want to interact than miss out on someone who was potentially interested.
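Preferring a false approach over a missed interested person corresponds to prioritising recall for the "potential use" label. A minimal sketch of that metric follows; the label names are hypothetical.

```python
def recall(y_true, y_pred, positive):
    """Share of truly positive samples that were predicted as positive."""
    pairs = list(zip(y_true, y_pred))
    true_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    false_neg = sum(1 for t, p in pairs if t == positive and p != positive)
    if true_pos + false_neg == 0:
        return 0.0
    return true_pos / (true_pos + false_neg)


# Hypothetical labels: one interested person is missed by the prediction.
y_true = ["use", "use", "ignore", "use"]
y_pred = ["use", "ignore", "use", "use"]
use_recall = recall(y_true, y_pred, positive="use")
```

In this toy example, two of the three people who wanted to use the dispenser are found, so the recall is two thirds, while the false approach towards the "ignore" person does not lower it.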

Data preparation: First, I split my dataset into training and test data. I repeat the same for the PCA features.
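Such a split can be sketched as follows. The test fraction and the random seed are assumptions, since the values used in the project are not stated here. Reusing the same indices for the original features and for the PCA features keeps the two comparisons on identical participants.

```python
import numpy as np


def train_test_split_indices(n_samples, test_fraction=0.2, seed=0):
    """Shuffle sample indices and split them into train and test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = max(1, int(round(n_samples * test_fraction)))
    return order[n_test:], order[:n_test]


# 25 participants as in the final dataset; the fraction is an assumption.
train_idx, test_idx = train_test_split_indices(25, test_fraction=0.2)
```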

Logistic Regression Classifier

Decision Tree Classifier

KNN Classifier
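To make the idea behind the KNN classifier concrete, here is a minimal sketch written from scratch: each test sample receives the most common label among its k nearest training samples. This is an illustration of the technique, not the implementation used in the project; the value of k, the Euclidean distance, and the toy data are assumptions.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, X_test, k=3):
    """Predict a label for each test sample by majority vote of k neighbours."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    predictions = []
    for x in X_test:
        # Euclidean distance from this test sample to every training sample.
        distances = np.linalg.norm(X_train - x, axis=1)
        nearest = np.argsort(distances, kind="stable")[:k]
        votes = Counter(y_train[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return predictions


# Toy data with two clearly separated groups and hypothetical labels.
X_train = [[0, 0], [0, 1], [5, 5], [5, 6]]
y_train = ["ignore", "ignore", "use", "use"]
predicted = knn_predict(X_train, y_train, [[0, 0.5], [5, 5.5]], k=3)
```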

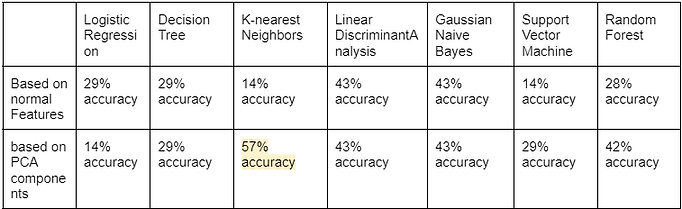

Summary of all classification results

Conclusion: I find the highest accuracy, 57%, when running the KNN algorithm on our PCA components. The average accuracy is 28.57% for the classifiers trained on our original features and 36.71% for the classifiers trained on our PCA components. A predictive model is typically considered good at accuracies above roughly 90%, so our models perform quite badly on our data. We could have reached similar results with smart guessing, that is, by always predicting the label with the highest density of data. Our best PCA-based classifier also outperforms all classifiers based on the original features. This is not too surprising, since the PCA components showed slightly more independence in the KDE plot.
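The "smart guessing" comparison can be made explicit with a majority-class baseline: always predict the most frequent training label and measure the accuracy on the test set. The sketch below uses made-up labels, so its numbers do not correspond to the results reported above.

```python
from collections import Counter


def majority_baseline_accuracy(y_train, y_test):
    """Accuracy obtained by always predicting the most frequent train label."""
    majority_label = Counter(y_train).most_common(1)[0][0]
    hits = sum(1 for label in y_test if label == majority_label)
    return majority_label, hits / len(y_test)


# Hypothetical labels for illustration only.
y_train = ["ignore", "ignore", "ignore", "use", "use"]
y_test = ["ignore", "use", "ignore", "ignore"]
baseline_label, baseline_accuracy = majority_baseline_accuracy(y_train, y_test)
```

A classifier is only informative if it clearly beats this baseline on the same test set.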

The quality of the shared language:

As this project shows, even the simplest interactions between human and machine can be quite complex. A new shared language needs to be created that both entities can understand. That requires good observations and prototyping of interactions in order to identify effective non-verbal human gestures. The ML analysis showed clearly that a lot of data is required to properly translate those human cues into actionable data for the robot.

Data improvements for the future:

Design (robot embodiment) improvements for the future:

This web page only illustrates the relevant parts of the project that I carried out myself. To see the full project report and documentation, check out the button below.